Executive Summary

Across Europe, organizations are rethinking their technology strategies in response to new regulations, rising cloud costs, and a growing focus on digital sovereignty. Rather than relying solely on closed ecosystems or large hyperscale providers, many are exploring alternative approaches that balance flexibility, control, and sustainability.

Open-source technologies play an important role in this shift, offering transparency, adaptability, and independence from vendor lock-in. At the same time, managed cloud services continue to provide speed, reliability, and convenience that are difficult to match. The most effective strategies often blend both worlds.

This article examines the evolving landscape of modern data platforms, from open-source foundations to managed and hybrid models, and highlights what makes them professional: clear governance, strong automation, and secure operations. It reflects Aurai’s experience helping organizations navigate these choices and align their data platforms with long-term business and AI strategy.

The European Open-Source Context

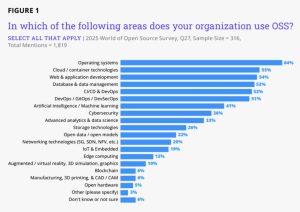

Across Europe, digital sovereignty and regulatory frameworks increasingly influence technology choices. The 2025 World of Open Source: Europe Spotlight report highlights that open source offers transparency, flexibility, and local control, making it a strategic consideration for public and private organizations alike.

Still, the choice between open source and managed platforms is rarely binary. The most sustainable data strategies typically combine open-source flexibility with cloud-native reliability, tailored to each organization’s needs, risk profile, and operational maturity.

Figure 1 – Open-Source Adoption in AI & Analytics (Linux Foundation Europe, 2025)

The Modern Open-Source Stack

Every data team eventually faces the same question: how much control do we really want over our platform?

For some, managed cloud services offer the speed and simplicity they need. For others, open-source tools provide the freedom to adapt, optimize, and grow on their own terms.

When done well, open source can feel just as seamless as any commercial solution, with the added advantage of transparency and flexibility.

At Aurai, we have seen that success with open source depends less on which tools you pick and more on how you combine them. The real art lies in turning individual components into one coherent, professional-grade ecosystem.

It starts with data ingestion, the moment information enters the platform. Airbyte connects to APIs, SaaS tools, and databases without the recurring costs or limitations of proprietary connectors. It makes data movement visible and adaptable, so you can inspect every step and tune it as business needs evolve.

Once the data lands, it needs a reliable home. MinIO provides secure, S3-compatible storage that can run anywhere, from private data centers to sovereign clouds. It keeps data within your control while remaining compatible with the entire open-source analytics landscape.

To coordinate everything, Apache Airflow serves as the conductor. It automates the choreography of ingestion, transformation, and reporting. Because workflows are defined as code, engineers can review, version, and improve them just like software.
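To make the idea of workflows as code concrete, here is a minimal plain-Python sketch. It does not use Airflow's API. It only shows the underlying principle: tasks and their dependencies are declared as data, and a valid run order is derived from them. The task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical task names; each task maps to the set of tasks it depends on.
PIPELINE = {
    "ingest_orders": set(),
    "ingest_customers": set(),
    "transform_sales": {"ingest_orders", "ingest_customers"},
    "refresh_dashboard": {"transform_sales"},
}


def execution_order(pipeline):
    """Return one valid run order in which every task follows its dependencies."""
    # TopologicalSorter raises graphlib.CycleError if the dependencies loop.
    return list(TopologicalSorter(pipeline).static_order())


if __name__ == "__main__":
    for step, task in enumerate(execution_order(PIPELINE), start=1):
        print(f"{step}. {task}")
```

Because the pipeline is ordinary code, a change to the dependency graph shows up as a reviewable diff, which is the property the orchestration layer relies on.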

Transformation is where raw data becomes insight. dbt Core takes familiar SQL and turns it into maintainable, version-controlled logic with built-in testing and documentation. For teams that care about governance and quality, dbt brings order and accountability to the analytics layer.
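The testing idea can be illustrated without dbt itself. A dbt data test is essentially a query that selects failing rows, and the test passes when nothing comes back. The sketch below imitates two common checks, not-null and unique, using Python's built-in sqlite3 module. It is an approximation of the concept rather than dbt's implementation, and the table, columns, and data are made up.

```python
import sqlite3


def not_null_failures(conn, table, column):
    """Return rows where `column` is NULL; an empty list means the test passes."""
    # Identifiers are interpolated directly, so they must be trusted constants.
    return conn.execute(f"SELECT * FROM {table} WHERE {column} IS NULL").fetchall()


def unique_failures(conn, table, column):
    """Return (value, count) pairs for values that occur more than once."""
    query = (
        f"SELECT {column}, COUNT(*) FROM {table} "
        f"WHERE {column} IS NOT NULL "
        f"GROUP BY {column} HAVING COUNT(*) > 1"
    )
    return conn.execute(query).fetchall()


def build_demo_db():
    """Create an in-memory table with one duplicate key and one NULL (made-up data)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE orders (order_id INTEGER, customer_id INTEGER);
        INSERT INTO orders VALUES (1, 10), (2, 11), (2, 12), (3, NULL);
        """
    )
    return conn


if __name__ == "__main__":
    conn = build_demo_db()
    print("not_null failures:", not_null_failures(conn, "orders", "customer_id"))
    print("unique failures:", unique_failures(conn, "orders", "order_id"))
```

In a CI pipeline, any non-empty result would fail the build, so a broken assumption about the data is caught before it reaches a dashboard.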

Then comes performance. Databend and ClickHouse handle analytical workloads with remarkable speed and scalability. Databend offers cloud-native elasticity by separating compute from storage, while ClickHouse shines in large-scale OLAP scenarios and real-time dashboards.

Finally, visibility ties it all together. Grafana helps both engineers and decision-makers see what is happening, not only in the data but also in the platform itself. It becomes the single window into business KPIs, operational metrics, and infrastructure health.

For many organizations, the perfect setup is not purely open source or purely managed. The most resilient solutions combine both, blending open-source flexibility with the convenience of cloud services. What matters most is intentional design, understanding where to keep control and where to delegate.

Open-Source Tools and Their Common Counterparts

| Data Lifecycle Stage | OSS Tool | Commercial / Managed Counterpart | Primary Focus / Distinction |
| --- | --- | --- | --- |
| Ingestion | Airbyte | Fivetran, Stitch | Flexible, self-hosted connectors without subscription costs. |
| Storage / Data Lake | MinIO | Amazon S3, Azure Blob, Google Cloud Storage | S3-compatible object storage under full control. |
| Orchestration | Apache Airflow | Azure Data Factory, AWS Step Functions, Google Cloud Composer | Code-based workflow automation and transparency. |
| Transformation | dbt Core | Databricks SQL Transformations, Google Dataform | SQL-driven modeling with version control and testing. |
| Analytical Engine (Lakehouse) | Databend | Snowflake, BigQuery | Cloud-native elasticity without licensing overhead. |
| Analytical Engine (OLAP) | ClickHouse | Azure Synapse, Amazon Redshift | Extremely fast analytical queries for large datasets. |
| Visualization & Observability | Grafana | Power BI, Looker, Tableau | Unified dashboards for business KPIs and platform health. |

Hosting Choices

Choosing where to host your data platform is rarely a technical decision alone. It’s also about trust, control, and how much responsibility your team wants to take on. Over the past few years, we’ve seen three main paths emerge, each with its own balance between convenience and autonomy.

Most organizations begin in the public cloud, taking advantage of managed services and infrastructure from providers such as AWS, Azure, or Google Cloud. These platforms make it easy to combine native tools like Data Factory, Glue, or BigQuery with containerized workloads running on Kubernetes. The public cloud offers rapid setup, scalability, and global availability, allowing data teams to focus on delivering insights rather than maintaining hardware. The trade-off is dependency on the vendor ecosystem, which can affect cost predictability and long-term flexibility.

At the other end of the spectrum are organizations that decide to self-host their data platforms. This can take the form of a private cloud managed within their own datacenter or through a trusted local partner. In many cases, these setups are powered by on-premises Kubernetes distributions such as RKE2 or K3s. Running your own environment offers maximum control and predictability, which is valuable for workloads with strict security or compliance requirements. However, it also demands a mature DevOps culture and continuous operational effort.

A smaller but growing number of organizations are beginning to explore European cloud providers, motivated by digital sovereignty and data-residency regulations. Companies such as OVHcloud and Scaleway are emerging as potential alternatives to the major hyperscalers, although adoption remains limited. For now, most teams continue to rely on the global cloud platforms while monitoring the progress of European ecosystems closely.

In reality, most organizations operate in a hybrid landscape that has evolved organically. Legacy systems, cloud services, and on-prem workloads coexist out of necessity rather than by design. The challenge is to bring consistency, governance, and cost control to this mixed environment. At Aurai, we help organizations turn that complexity into structure, ensuring that every component, regardless of where it runs, contributes to a coherent, secure, and scalable data platform.

Security by Design

No matter where a data platform is hosted, security has to be part of its foundation, not an afterthought. The challenge with open and modular architectures is that responsibility is shared across many layers: infrastructure, network, storage, and even the transformation logic itself. Designing for security means creating clear boundaries and predictable behaviors from day one.

It starts with access control. Every component in the platform, from Airbyte to Grafana, should respect role-based access control (RBAC). This ensures that engineers, analysts, and applications only see the data and resources they actually need.
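The principle behind role-based access control fits in a few lines. The sketch below is a tool-agnostic illustration, not the permission model of Airbyte, Grafana, or any other component. The role names, resources, and actions are hypothetical. The important design choice is that anything not explicitly granted is denied.

```python
# Hypothetical roles mapped to the (resource, action) pairs they may perform.
ROLE_PERMISSIONS = {
    "analyst": {("dashboards", "read"), ("marts", "read")},
    "engineer": {
        ("dashboards", "read"),
        ("marts", "read"),
        ("raw", "read"),
        ("pipelines", "write"),
    },
}


def is_allowed(roles, resource, action):
    """Deny by default: allow only if one of the user's roles grants the pair."""
    return any(
        (resource, action) in ROLE_PERMISSIONS.get(role, set()) for role in roles
    )


if __name__ == "__main__":
    print(is_allowed(["analyst"], "marts", "read"))       # granted to analysts
    print(is_allowed(["analyst"], "pipelines", "write"))  # not granted to analysts
```

Unknown roles resolve to an empty permission set, so a typo in a role name results in no access rather than accidental access.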

Next comes secret management. Credentials and API keys should never live inside code or configuration files. Instead, they can be managed through Kubernetes Secrets, ideally with encryption at rest enabled because Secrets are only base64-encoded by default, or through tools like the External Secrets Operator, which synchronizes them from cloud secret managers.
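On the application side, this usually means reading credentials at runtime instead of embedding them. Kubernetes can expose a Secret to a container as an environment variable or a mounted file. The sketch below assumes the environment-variable route. The variable name `WAREHOUSE_PASSWORD` and the helper are hypothetical.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret was not injected into the environment."""


def read_secret(name, env=None):
    """Return the secret called `name`, failing fast if it is absent or empty."""
    source = os.environ if env is None else env
    value = source.get(name)
    if not value:
        # The message names the secret but never includes its value.
        raise MissingSecretError(f"secret {name!r} is not set")
    return value


if __name__ == "__main__":
    try:
        password = read_secret("WAREHOUSE_PASSWORD")  # hypothetical variable name
        print("secret loaded, length:", len(password))
    except MissingSecretError as exc:
        print("startup aborted:", exc)
```

Failing at startup is deliberate: a service that cannot find its credentials should stop immediately rather than fall back to a default baked into the image.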

Network isolation plays an equally important role. By defining Kubernetes NetworkPolicies, internal traffic can be restricted so that only approved services can communicate with each other. This reduces the risk of accidental data exposure and keeps workloads contained even if something goes wrong.
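As an illustration, the sketch below builds a NetworkPolicy manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The scenario, where only ingestion pods may reach object storage on port 9000, is an assumption for the example. So are the namespace and the `app` labels. Note that a NetworkPolicy only takes effect when the cluster's network plugin enforces it.

```python
import json


def allow_ingress_policy(name, namespace, target_labels, client_labels, port):
    """Build a NetworkPolicy that admits TCP traffic to the target pods
    only from pods carrying `client_labels`, and only on `port`."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Pods this policy protects.
            "podSelector": {"matchLabels": target_labels},
            # Once a pod is selected for Ingress, all other inbound traffic is denied.
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": client_labels}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    policy = allow_ingress_policy(
        name="minio-allow-airbyte",        # hypothetical names and labels
        namespace="data-platform",
        target_labels={"app": "minio"},
        client_labels={"app": "airbyte"},
        port=9000,
    )
    print(json.dumps(policy, indent=2))
```

Generating manifests from a small function like this keeps policies consistent across services, although many teams express the same thing directly in Helm templates.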

User authentication is another cornerstone. Integrating single sign-on through OIDC allows the same identity provider to govern access across all tools. Whether that is Cognito, Authentik, or Keycloak, the goal is consistency: one login, one source of truth, and one audit trail.

Data itself must also be protected. Encrypting storage buckets in MinIO and enforcing TLS for every endpoint ensures that information remains secure both at rest and in transit. And when it comes to resilience, Velero with CSI snapshots can handle backup and recovery in a way that fits naturally into Kubernetes.

In the end, security is less about individual tools and more about a mindset. A professional platform is one that expects failure, plans for it, and continues to operate safely when it happens.

Scaling

One of the biggest strengths of modern data platforms is that they can grow organically. You do not have to overprovision from day one. Kubernetes makes this possible by allowing every part of the system, from ingestion pipelines to analytics workloads, to scale independently based on demand.

A good scaling strategy starts with separation of workloads. Ingestion jobs, transformation tasks, and analytical queries all behave differently. By assigning them to dedicated node pools, each can scale according to its own performance profile. This prevents ingestion from slowing down analytics, and vice versa.

Kubernetes also brings automatic elasticity through the Horizontal Pod Autoscaler, which adjusts the number of pod replicas based on observed metrics such as CPU utilization. When more data arrives or more users query dashboards, it adds pods; when activity drops, it scales back down. This keeps performance steady while avoiding unnecessary costs.
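The scaling decision follows a simple rule documented by Kubernetes: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up. The sketch below reproduces that calculation in simplified form. It ignores pod readiness, missing metrics, and stabilization windows, which the real controller also considers. The replica bounds in the example are arbitrary.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified Horizontal Pod Autoscaler calculation.

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0 and clamped
    to the configured replica bounds otherwise.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: do not scale
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods at 90% average CPU against a 60% target.
    print(desired_replicas(4, current_metric=90, target_metric=60))
    # The same 4 pods at 30% average CPU.
    print(desired_replicas(4, current_metric=30, target_metric=60))
```

With a 60% target, four pods running at 90% scale out to six, and the same four pods at 30% scale in to two. The tolerance band prevents the cluster from oscillating around the target.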

For persistent workloads such as databases or lakehouse engines, StatefulSets give each pod a stable identity and its own persistent volume, so data survives when nodes are replaced or pods are restarted. Combined with dynamic volume provisioning, they ensure that critical storage remains stable across scaling events.

Equally important is configuration management. In professional environments, every deployment and change should be defined declaratively, often through Helm charts, and managed with GitOps tools like Argo CD. This approach allows teams to reproduce environments, roll back easily, and maintain transparency in how the platform evolves.
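The core of the GitOps idea is a comparison between the state declared in Git and the state running in the cluster. The sketch below shows that comparison in its simplest form. It is a conceptual illustration rather than how Argo CD or Helm work internally. The release names and version numbers are invented.

```python
def plan_changes(desired, live):
    """Compare declared releases with running ones and list the actions needed.

    Both arguments map a release name to a version string.
    """
    actions = []
    for name in sorted(desired.keys() | live.keys()):
        want, have = desired.get(name), live.get(name)
        if have is None:
            actions.append(f"install {name} {want}")
        elif want is None:
            actions.append(f"remove {name} {have}")
        elif want != have:
            actions.append(f"change {name} {have} -> {want}")
    return actions


if __name__ == "__main__":
    declared_in_git = {"airflow": "1.4.0", "grafana": "2.0.1", "minio": "5.1.0"}
    running_in_cluster = {"airflow": "1.3.2", "grafana": "2.0.1", "clickhouse": "0.9.0"}
    for action in plan_changes(declared_in_git, running_in_cluster):
        print(action)
```

A rollback is then nothing more than reverting the commit that changed the declared versions. The same comparison produces the actions that restore the previous state.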

The goal is not just to handle growth, but to do it predictably. Scaling should feel invisible to the end user. Dashboards remain responsive, ingestion continues on schedule, and costs stay under control. When Kubernetes scaling works well, the platform simply adjusts itself quietly, efficiently, and without interruption.

Challenges and Lessons Learned

Building a professional open-source data platform is rewarding, but it is rarely straightforward. The individual components are powerful, yet each comes with its own defaults, dependencies, and quirks. Success depends on how well these pieces fit together, both technically and operationally.

One of the first lessons we learned is that consistency matters more than choice. There are often several right answers in the open-source world, but mixing them without a clear strategy can create friction. Helm charts, for example, are not always aligned across projects. Small configuration differences can lead to unexpected behaviors when components start interacting.

Versioning is another recurring challenge. Each tool evolves at its own pace, which means upgrades have to be tested carefully before rollout. It is tempting to always chase the latest release, but stability usually comes from controlled change rather than constant innovation.

We also found that performance tuning is part of the journey. Balancing cost and speed takes time. Running everything on maximum settings might look impressive at first, but it quickly becomes expensive. Careful benchmarking helps reveal the sweet spot between responsiveness and efficiency.

Documentation and automation deserve special attention. In fast-moving projects, it is easy for documentation to lag behind the latest implementation. Keeping code, infrastructure, and knowledge in sync requires discipline and a culture of sharing. The more teams automate, from deployments to testing, the more likely they are to maintain that alignment.

Perhaps the most valuable insight is that professionalism in open source comes from process, not just technology. Clear ownership, defined release cycles, and proactive monitoring turn a collection of tools into a reliable platform. It is the maturity of the team around the technology that ultimately determines its success.

What Makes It Professional

After working with many different architectures and technologies, one thing becomes clear: professionalism is not defined by the tools you choose, but by how you use them. A data platform becomes professional when it behaves predictably, scales reliably, and supports the business with confidence.

It starts with reproducibility. Every part of the environment should be defined as code, from the infrastructure to the transformations. Using Helm charts together with a GitOps workflow ensures that deployments are not one-off actions but repeatable processes. This makes recovery, testing, and scaling far simpler.

Then comes security and resilience. A professional platform assumes that things can go wrong and is built to handle that gracefully. Encryption, role-based access control, and automated backups are not optional extras. They are the safeguards that keep data secure and operations stable.

Visibility is another crucial trait. Through observability, teams can see how the platform behaves, where performance changes, and when data quality drifts. Dashboards and alerts turn potential surprises into manageable signals.

Continuous testing and validation are equally important. With CI/CD pipelines and dbt tests, every change to the platform can be verified before it reaches production. This discipline creates trust, both within the data team and with the stakeholders who rely on the insights produced.

Finally, a professional platform is governed and adaptable. It documents its data lineage, defines ownership, and can evolve without redesign. Each component can be replaced or upgraded without disrupting the whole.

In short, professionalism is not a fixed state. It is a way of working that values stability, transparency, and improvement over time. When these principles guide how a platform is designed and maintained, technology becomes an enabler instead of a risk.

Final Reflection

In 2025, open-source data platforms have matured into a credible and strategic option for organizations that value sovereignty, flexibility, and long-term sustainability. Yet, they are not the only path. Many organizations continue to thrive on managed or hybrid platforms that offer convenience, integrated tooling, and strong vendor support.

The real question is not whether open source is ready, but whether it aligns with the strategy and capabilities of your organization. The best choice depends on what you value most: control, scalability, compliance, or simplicity. What matters is making that choice deliberately and building the processes and governance to support it.

At Aurai, we help organizations evaluate these trade-offs and design data platforms that fit their goals, whether they are built on open-source foundations, cloud-native services, or a mix of both. With the right architecture, mindset, and guidance, every organization can create a platform that is not only secure and scalable but also truly its own.

Interested in designing a data platform that works for your organization? Get in touch with our team at info@aurai.com to discuss your data strategy and architecture vision.

Sources & References

Cailean Osborne & Adrienn Lawson, “Open Source as Europe’s Strategic Advantage: Trends, Barriers, and Priorities for the European Open Source Community amid Regulatory and Geopolitical Shifts,” The Linux Foundation Europe, August 2025. Licensed under CC BY-ND 4.0.