Natural Language Processing

The field of NLP covers the interactions between humans and computers, and focuses on helping programs understand language data by bridging the differences between how computers and humans communicate. When a computer can (nearly) understand the details and contextual nuances of language, we can put it to use by extracting insights and information from texts.

While text mining and NLP both deal with textual data, there are some differences between them. Text mining focuses on retrieving information from text data and discovering patterns within those texts, while NLP tries to understand and replicate language. The most important difference therefore lies in how deeply each one understands the texts it analyzes. Text mining extracts details and information from the text data but has no understanding of what those texts mean. NLP builds a more thorough understanding of texts by applying techniques that dig into their grammatical and semantic properties.

These techniques power larger applications, such as search engines, intelligent chatbots, and spellcheckers. Of the many techniques and methods available, in this blog we will discuss three: embeddings, topic modeling, and transformer-based language models.

Embeddings: Converting Words

Interpreting the contextual meaning of specific words within a sentence or text is a constant challenge when working with text data. As discussed in our earlier blog, humans have learned and internalized a lot of context before they read a text. A computer program often doesn’t have that luxury. Word embeddings can help resolve such ambiguities.

Word embeddings can be seen as numerical representations of words spread across a large number of dimensions. They transform text data (words and sentences stored as strings) into numerical vectors. Instead of comparing individual words, a computer can compare the numerical representations of those words, which plays to its strengths.

Numerical Representation for Words

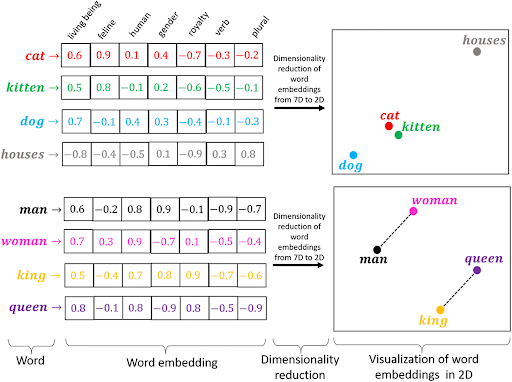

To get an embedding for each word in the text, we start by scoring each word in several different categories based on its surrounding words. To help visualize this, take the word ‘cat’ from the sentence ‘There is a cat sitting in a tree’ and give it a score between -1 and 1 for each of several categories. For the category animals it might score 0.9, for the category food 0.2, and for the category Christmas -0.7. Repeat this for at least a couple hundred categories (or dimensions), and then for every word in every sentence. Eventually you end up with a numerical representation, or vector, for each word, and these vectors can be compared with each other (see Figure 1 above). In practice, the dimensions are learned automatically and rarely correspond to human-readable categories like these; the labels here only help build intuition.
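
To make the comparison step concrete, here is a minimal sketch that treats the three category scores above as a tiny 3-dimensional embedding and compares vectors with cosine similarity, a common similarity measure for embeddings. The ‘cat’ scores come from the example; the ‘dog’ and ‘tree’ vectors are made-up values for illustration, and real embeddings have hundreds of learned dimensions.

```python
import math

# Toy 3-dimensional "embeddings" with dimensions (animals, food, Christmas).
# The 'cat' scores come from the example in the text; 'dog' and 'tree'
# are made-up values for illustration only.
embeddings = {
    "cat": [0.9, 0.2, -0.7],
    "dog": [0.8, 0.3, -0.6],
    "tree": [-0.2, -0.4, 0.8],
}


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1 = same direction, -1 = opposite."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


for other in ("dog", "tree"):
    score = cosine_similarity(embeddings["cat"], embeddings[other])
    print(f"cat vs {other}: {score:.2f}")
```

With these values, ‘cat’ and ‘dog’ come out close to 1 (very similar), while ‘cat’ and ‘tree’ come out negative.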

If we automate this and scale it up, we can create vectors for many words. The vectors are scored using the surrounding words as context, so the behavior, or meaning, of each word is essentially captured in its vector. Words that appear in similar contexts end up with similar scores. With the previous example in mind, the word ‘dog’ will probably behave similarly to the word ‘cat’, because the words surrounding it are similar, so the two words get similar vectors. This similarity in behavior is captured at a deeper level too: synonyms end up with similar scores. Very cool! This is all done using shallow neural networks, and word2vec is one of the most popular techniques for it.
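
The idea that a word is characterized by its neighbors can be sketched without a neural network. The example below builds count-based co-occurrence vectors from a made-up corpus and compares them with cosine similarity. This is a deliberately simpler stand-in, not word2vec: word2vec learns dense vectors with a shallow neural network from the same kind of context information. The corpus and the `window` size are assumptions for illustration.

```python
import math
from collections import Counter, defaultdict

# Tiny made-up corpus for illustration.
corpus = [
    "there is a cat sitting in a tree",
    "there is a dog sitting in a garden",
    "the cat chased a mouse",
    "the dog chased a ball",
    "we ate bread for breakfast",
]


def context_vectors(sentences, window=2):
    """For every word, count which words appear within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors


def cosine(c1, c2):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(c1[k] * c2[k] for k in c1.keys() & c2.keys())
    n1 = math.sqrt(sum(v * v for v in c1.values()))
    n2 = math.sqrt(sum(v * v for v in c2.values()))
    return dot / (n1 * n2) if n1 and n2 else 0.0


vecs = context_vectors(corpus)
print(f"cat vs dog:   {cosine(vecs['cat'], vecs['dog']):.2f}")
print(f"cat vs bread: {cosine(vecs['cat'], vecs['bread']):.2f}")
```

Because ‘cat’ and ‘dog’ appear in identical contexts in this toy corpus, their vectors match exactly (similarity 1.00), while ‘cat’ and ‘bread’ share no context words (0.00).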

Word-embedding techniques convert words to numerical representations and use those representations for comparison. The same idea extends to larger units of text, such as paragraphs or entire documents (doc2vec). Doc2vec works much like word2vec, except that the surrounding document is also used as a reference during training. Instead of every word in a text being transformed into a vector, the entire text is transformed into one single vector, which condenses the text’s information into one numerical representation. That vector is not a readable summary, though: to find out what a text is actually about, we need further techniques.
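
Doc2vec learns its document vectors during training, which takes more machinery than fits here. A common, much simpler baseline for turning a text into a single vector is to average the vectors of its words; the sketch below shows that baseline, not doc2vec itself. The word vectors are hypothetical values on the same three example categories.

```python
# Hypothetical 3-dimensional word vectors (animals, food, Christmas),
# made up for illustration.
word_vectors = {
    "cat": [0.9, 0.2, -0.7],
    "dog": [0.8, 0.3, -0.6],
    "purrs": [0.7, 0.0, -0.3],
    "cookies": [-0.1, 0.9, 0.6],
    "baked": [-0.3, 0.8, 0.2],
}


def document_vector(text, vectors):
    """Average the vectors of the known words into one vector for the whole text.

    A simple averaging baseline, NOT doc2vec (which learns a document vector
    during training). Unknown words such as 'the' are skipped.
    """
    known = [vectors[w] for w in text.lower().split() if w in vectors]
    if not known:
        raise ValueError("no known words in text")
    dim = len(known[0])
    return [sum(v[i] for v in known) / len(known) for i in range(dim)]


doc = document_vector("The cat purrs", word_vectors)
print([round(x, 2) for x in doc])
```

Averaging discards word order and can blur texts that mix several subjects, which is one reason dedicated methods such as doc2vec exist.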

Topic Modeling: Clustering Words

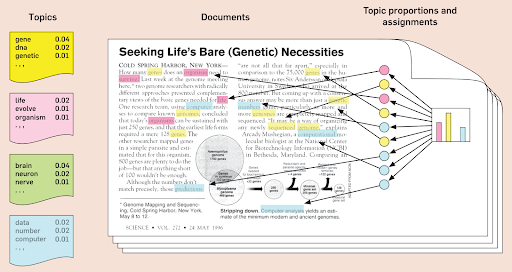

Embedding techniques are based on words and how they relate to each other. Topic modeling instead looks at how texts are composed of words, based on a few core ideas. Every text is a collection of words. By grouping those words into topics, each topic can be represented by a collection of words, and each text by the topics its words belong to.

The nuts and bolts of it: topic modeling is an unsupervised machine learning method that aims to detect common themes across documents based on which words tend to occur together. It clusters words that are frequently used together into groups. For example, a text about cats will often contain words related to that subject, such as ‘kitten’, ‘cat’, ‘paw’, ‘fur’, and ‘meow’. By identifying these word groups, topic modeling can group texts that contain them with other texts signaling similar topics, and build a model that scores the topics within and across texts. A text rarely contains just one topic; instead, each text is treated as a combination of topics.
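
The blog does not name a specific algorithm, but one widely used topic model is Latent Dirichlet Allocation (LDA). Below is a minimal pure-Python sketch of LDA fitted with collapsed Gibbs sampling on a made-up five-text corpus. The hyperparameters (`alpha`, `beta`, `iterations`, `seed`) are illustrative assumptions; libraries such as gensim and scikit-learn provide optimized LDA implementations for real workloads.

```python
import random
from collections import defaultdict

# Made-up toy corpus with two themes (cats and baking).
docs = [
    "kitten cat paw fur meow".split(),
    "cat fur meow kitten".split(),
    "oven flour bake bread".split(),
    "bread flour oven dough bake".split(),
    "cat paw kitten bake bread".split(),
]


def lda_gibbs(docs, n_topics, alpha=0.1, beta=0.01, iterations=200, seed=0):
    """LDA via collapsed Gibbs sampling.

    Returns per-document topic mixtures and per-topic word counts.
    """
    rng = random.Random(seed)
    vocab_size = len({w for doc in docs for w in doc})
    doc_topic = [[0] * n_topics for _ in docs]                 # n(d, k)
    topic_word = [defaultdict(int) for _ in range(n_topics)]   # n(k, w)
    topic_total = [0] * n_topics                               # n(k)
    topics = range(n_topics)
    assignments = []
    # Start by giving every word occurrence a random topic.
    for d, doc in enumerate(docs):
        z = []
        for w in doc:
            k = rng.randrange(n_topics)
            z.append(k)
            doc_topic[d][k] += 1
            topic_word[k][w] += 1
            topic_total[k] += 1
        assignments.append(z)
    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                # Remove the current assignment, then resample it with
                # p(k) proportional to (n(d,k)+alpha)*(n(k,w)+beta)/(n(k)+V*beta).
                k = assignments[d][i]
                doc_topic[d][k] -= 1
                topic_word[k][w] -= 1
                topic_total[k] -= 1
                weights = [
                    (doc_topic[d][t] + alpha) * (topic_word[t][w] + beta)
                    / (topic_total[t] + vocab_size * beta)
                    for t in topics
                ]
                k = rng.choices(topics, weights=weights)[0]
                assignments[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][w] += 1
                topic_total[k] += 1
    mixtures = [
        [(doc_topic[d][t] + alpha) / (len(doc) + n_topics * alpha) for t in topics]
        for d, doc in enumerate(docs)
    ]
    return mixtures, topic_word


mixtures, topic_word = lda_gibbs(docs, n_topics=2)
for t, counts in enumerate(topic_word):
    top = sorted(counts, key=counts.get, reverse=True)[:3]
    print(f"topic {t}: {top}")
for d, mix in enumerate(mixtures):
    print(f"doc {d}: " + ", ".join(f"{p:.2f}" for p in mix))
```

Each row of `mixtures` is a text’s combination of topics and sums to 1, mirroring the point that a single text usually mixes several topics. On a corpus this small, the exact topics can vary with the seed.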

Topic modeling can quickly categorize or summarize texts, which comes in handy when there are many texts to analyze. For example, when a customer support team receives a large number of reviews, topic modeling can group them by what they are about (such as delivery, pricing, or product quality), and decisions can be based on that analysis; separating positive from negative reviews is a different task, better handled by sentiment analysis. Topic modeling is also useful in recommender systems, where a topic model infers the topics of texts you have read and liked, and recommends new texts on similar topics.
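
The recommender idea can be sketched once each text has a topic mixture. In this minimal example, a reader profile is the average mixture of the texts they liked, and unread texts are ranked by cosine similarity to that profile. The titles, the three topics, and all mixture values are hypothetical; in practice the mixtures would come from a fitted topic model.

```python
import math

# Hypothetical topic mixtures over three topics (cats, baking, travel),
# e.g. as produced by a topic model. All values are made up.
library = {
    "Caring for a new kitten": [0.85, 0.05, 0.10],
    "Sourdough for beginners": [0.05, 0.90, 0.05],
    "Cat-friendly road trips": [0.50, 0.05, 0.45],
    "Baking with kids": [0.10, 0.80, 0.10],
}
liked = ["Caring for a new kitten"]


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def recommend(liked, library, n=2):
    """Rank unread texts by how closely their topic mixture matches the reader."""
    n_topics = len(next(iter(library.values())))
    # Reader profile: the average topic mixture of the liked texts.
    profile = [sum(library[t][i] for t in liked) / len(liked) for i in range(n_topics)]
    candidates = [t for t in library if t not in liked]
    return sorted(candidates, key=lambda t: cosine(profile, library[t]), reverse=True)[:n]


print(recommend(liked, library))
# → ['Cat-friendly road trips', 'Baking with kids']
```

The road-trip text ranks first because it shares the cat topic, even though almost half of it is about travel.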

Transformer-Based Language Models

The world of data is developing rapidly, and sometimes one of those developments makes a big impact. Transformer-based models were one such development when Google introduced them in 2017. Tech giants develop cutting-edge technologies and often make them open source. If we adopt the newest technologies when they come out, we can better tune them to our needs.

Transformer-based models have a wide range of applications across NLP and beyond it (such as computer vision). These are some of the most complicated models out there, so we won’t go into every detail. Using them is more challenging, but the results can be outstanding. These models can be trained on larger datasets than was previously practical, because their architecture allows much more parallelization during training: instead of processing a text one word at a time, a transformer’s attention mechanism processes all words in a sequence at once. Their design led tech companies to develop pre-trained models such as BERT (Google), BART (Facebook), and GPT (OpenAI). These pre-trained models are trained on large datasets, so they are already very useful on their own. The Python library transformers (by Hugging Face) is a great place to start. Where these models are released openly, we can fine-tune them to fit our own use cases and build and engineer further on top of them.
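
The parallelism comes from the attention mechanism at the heart of the transformer. The sketch below implements single-head scaled dot-product self-attention, softmax(QKᵀ/√d_k)·V, in plain Python. The token embeddings and the projection matrices `w_q`, `w_k`, `w_v` are made-up values (in a real model they are learned), and it leaves out multiple heads, masking, positional encodings, and the rest of the transformer block.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply matrix a (n x m) by matrix b (m x p), both given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention: softmax(Q K^T / sqrt(d_k)) V.

    Each token's row is computed independently of the other rows, so real
    implementations compute all of them at once as matrix multiplications.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    outputs, weights = [], []
    for q_row in q:
        scores = [sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in k]
        attn = softmax(scores)
        weights.append(attn)
        # Output = attention-weighted sum of the value vectors.
        outputs.append([sum(p * v_row[j] for p, v_row in zip(attn, v)) for j in range(len(v[0]))])
    return outputs, weights


# Three tokens, each with a made-up 4-dimensional embedding.
x = [
    [1.0, 0.0, 1.0, 0.0],
    [0.0, 2.0, 0.0, 2.0],
    [1.0, 1.0, 1.0, 1.0],
]
# Made-up projection matrices (4 -> 2); a real transformer learns these.
w_q = [[1, 0], [0, 1], [1, 0], [0, 1]]
w_k = [[0, 1], [1, 0], [0, 1], [1, 0]]
w_v = [[1, 0], [0, 1], [1, 1], [0, 0]]

out, attn = self_attention(x, w_q, w_k, w_v)
for row in attn:
    print([round(p, 2) for p in row])
```

Each printed row shows how much one token attends to every token, including itself, and sums to 1. Because no row depends on another, the work maps well onto parallel hardware such as GPUs.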

The possibilities of Natural Language Processing are endless. Transformer-based language models power summarization, translation, text classification, information extraction, question answering, and text generation in over 100 languages.

Beyond Clever Experiments

The incredible power of these language models was illustrated in an experiment by Liam Porr on the text generation capabilities of GPT-3, a transformer-based language model introduced in 2020. He set up the model to write blog posts on its own and published them online to see whether people would notice the difference. One of the posts rose to the number one spot on the forum Hacker News: the machine, writing as a human, outranked the human-written posts.

These models have plenty of real-life applications beyond clever experiments. When you type into the Google search engine, it suggests the next word. Google Translate uses a transformer when you translate text from one language to another. These models are everywhere because they make language computable. The rewards are high if you can manage to incorporate the complexity of a transformer-based language model into your systems.
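
Suggesting the next word is a language-modeling task: predict the most likely continuation of what has been typed. GPT-style transformers are trained on exactly this next-word objective, but the idea can be shown with a far simpler bigram model that only counts which word follows which. This is a toy stand-in, not how Google’s suggestions or GPT work, and the query list is made up.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    following = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for current, nxt in zip(tokens, tokens[1:]):
            following[current][nxt] += 1
    return following


def suggest_next(following, word, n=3):
    """Return up to n of the most frequent words seen after `word`."""
    return [w for w, _ in following[word.lower()].most_common(n)]


# Made-up "search history" for illustration.
queries = [
    "weather today",
    "weather tomorrow",
    "weather today in amsterdam",
    "news today",
]
model = train_bigrams(queries)
print(suggest_next(model, "weather"))
# → ['today', 'tomorrow']
```

A transformer replaces these raw counts with learned representations that take the whole preceding context into account, not just the last word.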

Advanced Natural Language Processing

The three techniques discussed in this blog, although advanced, are just a small taste of the possibilities in the rapidly developing world of Natural Language Processing. We looked at the basics of word embeddings and topic modeling, and got a preview of the power of transformer-based language models. We hope this blog helps you match your skills to the increasing complexity and quantity of text data.